|

I'm a last-year PhD student at the University of California, Irvine, working on Brain-Inspired Artificial Intelligence with Prof. Jeff Krichmar and Prof. Emre Neftci. Before that, I received a B.S. degree in Computer Science from Sichuan University. I was fortunate to work at Google Cloud AI as a software engineer intern and at Amazon as a research scientist intern. I'm interested in applying machine learning in real-world scenarios and have experience in reinforcement learning, natural language processing, computer vision, recommender systems, mobile robotics and neuromorphic computing. Email / Google Scholar / Github / LinkedIn |

|

Jinwei Xing, Takashi Nagata, Xinyun Zou, Emre Neftci, Jeffrey L. Krichmar Neural Networks, 2023 Conducting input gradient regularization along with policy distillation allows us to generate highly interpretable gradient-based saliency maps and produce more robust policies. |

|

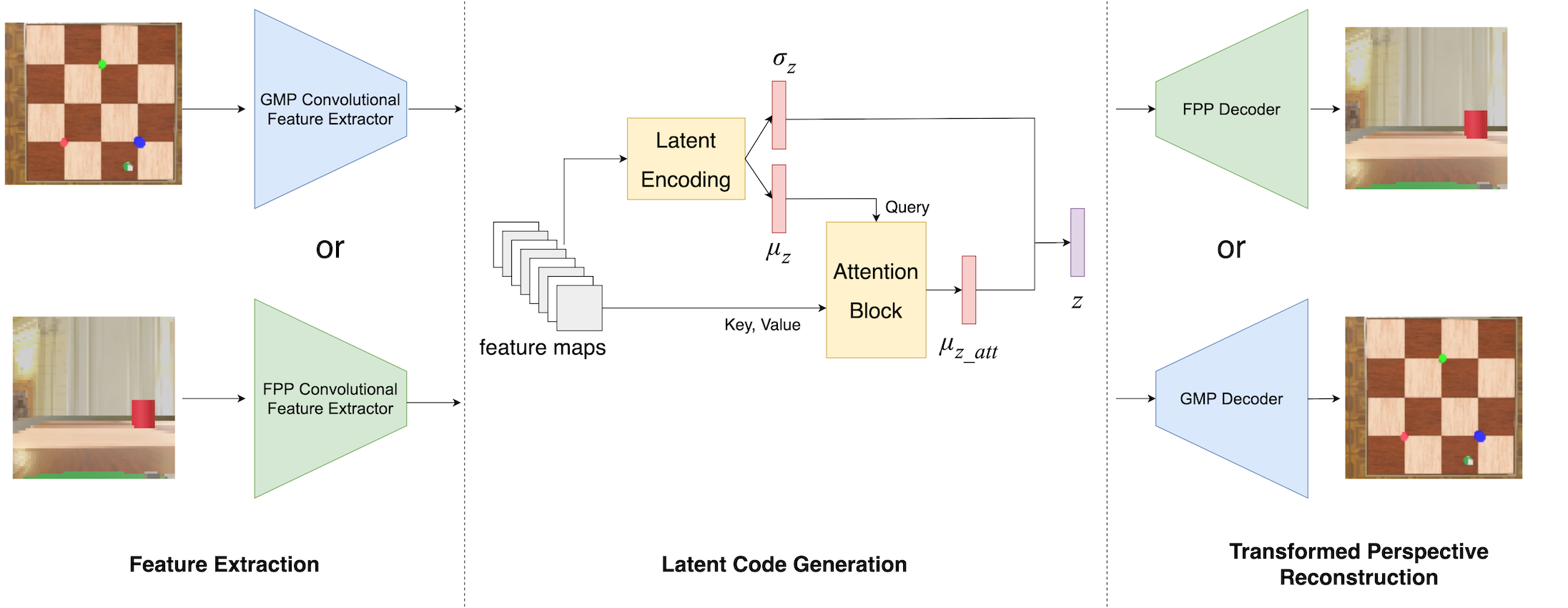

Jinwei Xing, Elizabeth R. Chrastil, Douglas A. Nitz, Jeffrey L. Krichmar PNAS, 2022 To investigate the computations needed to link a first-person experience to a global map in the brain, an important cognitive function, we used variational autoencoders to reconstruct top-down images from a robot’s camera view, and vice versa, and analyzed the results. |

|

Jinwei Xing, Takashi Nagata, Kexin Chen, Xinyun Zou, Emre Neftci, Jeffrey L. Krichmar AAAI, 2021 [code] By separating domain-general and domain-specific information and conducting RL training on the domain-general embedding, RL policies can achieve almost zero performance loss when deployed in new domains. |

|

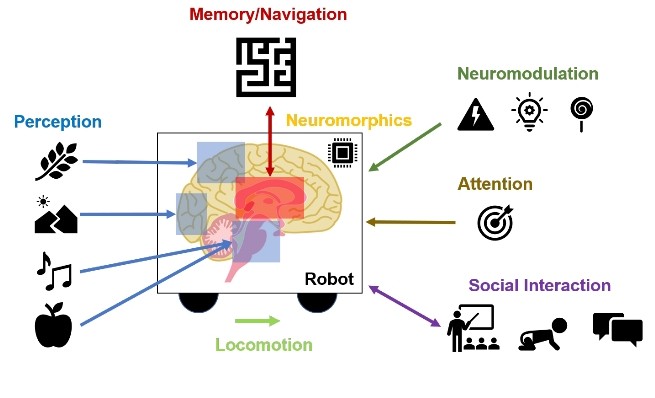

Stewart, Kenneth, Kexin Chen, Tiffany Hwu, Hirak J. Kashyap, Jeffrey L. Krichmar, Jinwei Xing, and Xinyun Zou Frontiers in Neurorobotics, 2020 Using neurorobots as a form of computational neuroethology can be a powerful methodology for understanding neuroscience, as well as for artificial intelligence and machine learning. |

|

Jinwei Xing, Xinyun Zou, Jeffrey L Krichmar IJCNN, 2020 Using semantic segmentation and deep Q learning to train a mobile robot that can drive in wilder environments. |

|

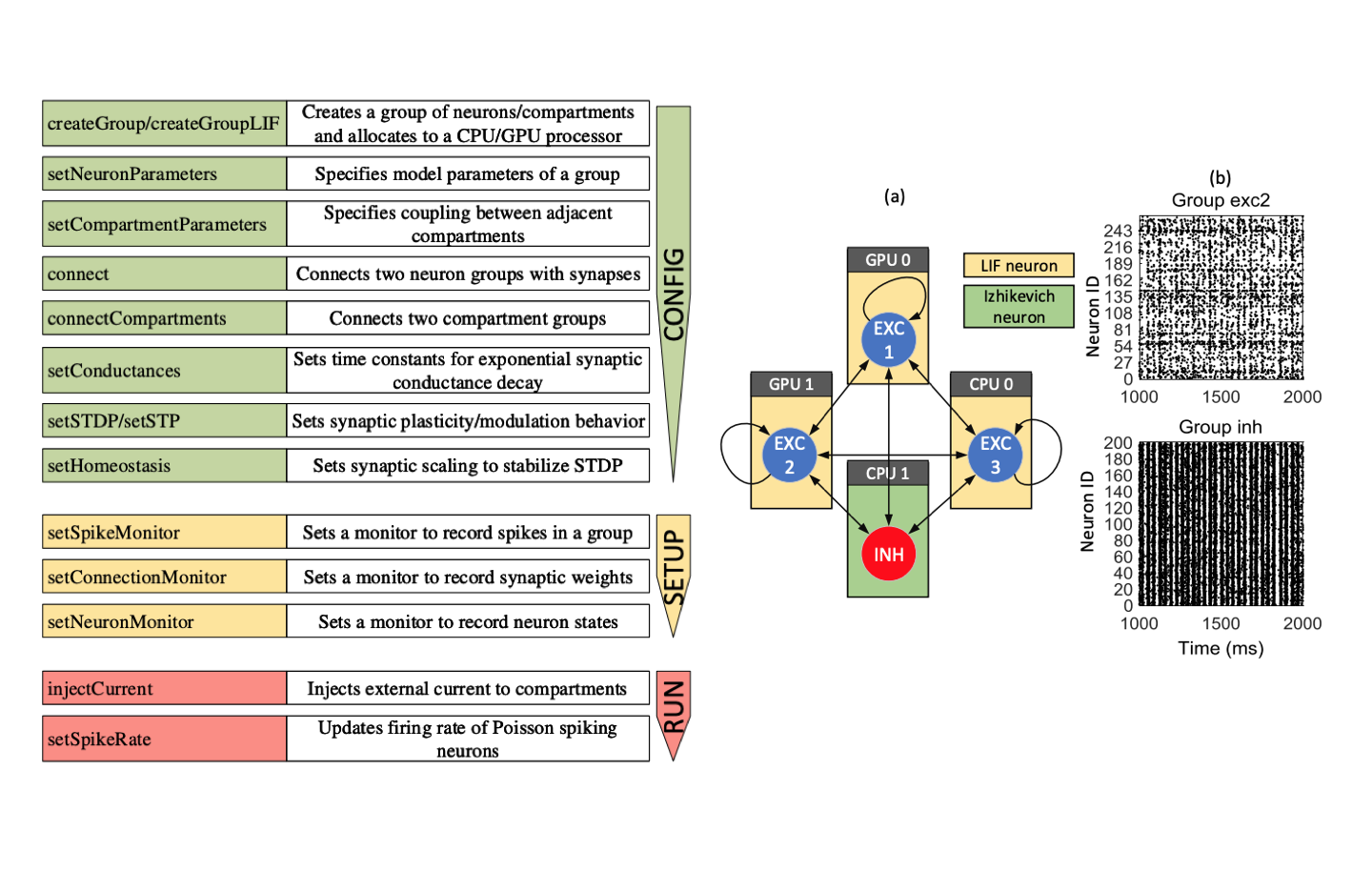

Ting-Shuo Chou, Hirak J Kashyap, Jinwei Xing, Stanislav Listopad, Emily L Rounds, Michael Beyeler, Nikil Dutt, Jeffrey L Krichmar IJCNN, 2018 CARLsim is an efficient, easy-to-use, GPU-accelerated library for simulating large-scale spiking neural network (SNN) models with a high degree of biological detail. |

|

[code] This project provides a modularized and unified implementation of a series of visual/image-based reinforcement learning algorithms (e.g. SAC, SAC+AE, CURL, RAD, DrQ and ATC) for continuous control tasks. |

|

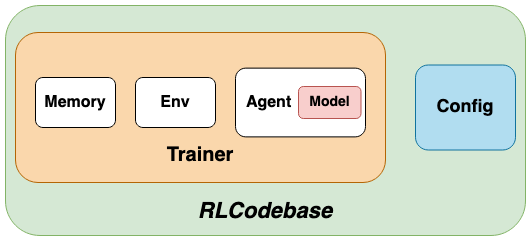

[code] RLCodebase is a modularized codebase for deep reinforcement learning algorithms based on PyTorch. This project aims to provide a user-friendly reinforcement learning codebase that lets beginners get started and researchers try out their ideas quickly and efficiently. |